A question that is often asked in Drupal circles is how far a Drupal site can scale, and what hardware is needed to get it there. The answers often go off on tangents, with some advocating multiple web servers behind a reverse proxy, plus multiple master/slave MySQL servers, like we have on Drupal.org.

The real answer is: it depends.

Depends on what? Many things, like:

- How many and what modules do you have on the site?

- Is your traffic mainly anonymous or logged in users?

- The hardware you have.

- The software configuration you have.

While there is no canned answer, there are plenty of things you can do to increase performance.

Here is a case study of a site that can handle a million page views a day, including a Digg front page.

Normal traffic pattern

This is the normal traffic pattern for this site. Weekends are low traffic, but weekdays are busy. Monday is the busiest day of the week, when people log in from work to catch up on the content that was posted over the weekend.

On a busy day, the site does close to 880,000 page views per day.

The site does around 17 to 18 million page views a month.

Much of the traffic on this site is from logged-in users checking new content, commenting on it, and creating their own nodes.

| Day | Date | Visits | Page Views | Hits | Bandwidth |
|-----|-------------|--------|------------|-----------|----------|
| Mon | 25 Feb 2008 | 53,636 | 879,777 | 7,636,793 | 90.69 GB |
| Tue | 26 Feb 2008 | 53,636 | 876,492 | 7,446,068 | 85.82 GB |
| Wed | 27 Feb 2008 | 52,552 | 818,191 | 7,034,696 | 80.03 GB |
| ... | | | | | |
| Sat | 01 Mar 2008 | 29,999 | 324,972 | 2,781,091 | 33.05 GB |
| Sun | 02 Mar 2008 | 33,709 | 431,533 | 3,518,855 | 42.37 GB |
| ... | | | | | |
| Mon | 03 Mar 2008 | 49,683 | 865,697 | 7,429,576 | 85.27 GB |
| Tue | 04 Mar 2008 | 50,981 | 831,947 | 6,990,756 | 80.63 GB |
| Wed | 05 Mar 2008 | 51,907 | 831,332 | 7,162,797 | 81.47 GB |
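To put the daily totals in perspective, here is a rough sketch that converts the busiest Monday in the table into per-second averages and per-page ratios. The variable names are ours, and two assumptions are baked in: traffic is treated as if it were spread evenly over 24 hours (it is not, so real peaks are higher), and a reported GB is taken to be 1024 x 1024 KB.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# Figures for Mon 25 Feb 2008, copied from the table above.
page_views = 879_777
hits = 7_636_793
bandwidth_gb = 90.69

# Daily totals expressed as flat per-second averages (an idealisation).
avg_page_views_per_sec = page_views / SECONDS_PER_DAY
avg_hits_per_sec = hits / SECONDS_PER_DAY

# How many requests (images, CSS, JS, the page itself) each page view costs.
hits_per_page_view = hits / page_views

# Assumption: 1 GB = 1024 * 1024 KB in the reported bandwidth column.
kb_per_page_view = bandwidth_gb * 1024 * 1024 / page_views

print(f"page views/sec (avg): {avg_page_views_per_sec:.2f}")
print(f"hits/sec (avg):       {avg_hits_per_sec:.2f}")
print(f"hits per page view:   {hits_per_page_view:.2f}")
print(f"KB per page view:     {kb_per_page_view:.2f}")
```

On these numbers the site averages roughly 10 page views and 88 hits per second over the whole day, with each page view pulling in between 8 and 9 hits.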

On Digg's front page

This site recently reached Digg's front page on a Sunday, and the long tail ran well into Monday. That pushed Monday's traffic to 985,000 page views, with double the normal number of visits.

Had the site been on Digg on a weekday, the 1 million page views per day mark would have been broken easily.

Since Digg's traffic is all anonymous, caching protects well against that.
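The reason caching helps so much with anonymous traffic is that every anonymous visitor gets the same page, so it only has to be built once. The toy sketch below illustrates the idea only. It is not Drupal's page cache or the memcache module, and `AnonymousPageCache` and `render_page` are hypothetical names made up for this example.

```python
from typing import Callable, Dict, Optional


class AnonymousPageCache:
    """Toy page cache: only anonymous requests are served from the cache."""

    def __init__(self, render: Callable[[str, Optional[str]], str]) -> None:
        self._render = render            # expensive page builder (hypothetical)
        self._pages: Dict[str, str] = {}
        self.render_count = 0            # how many times a page was really built

    def get(self, path: str, user: Optional[str] = None) -> str:
        if user is not None:
            # Logged-in users see personalised pages, so rebuild every time.
            self.render_count += 1
            return self._render(path, user)
        if path not in self._pages:
            # First anonymous request for this path: build once and store.
            self.render_count += 1
            self._pages[path] = self._render(path, None)
        return self._pages[path]


def render_page(path: str, user: Optional[str]) -> str:
    """Stand-in for the expensive work of building a page."""
    who = user or "guest"
    return f"<html>{path} for {who}</html>"


cache = AnonymousPageCache(render_page)

# A burst of 1000 anonymous requests for one story builds the page once.
for _ in range(1000):
    cache.get("/story/42")
anonymous_renders = cache.render_count

# Two logged-in requests each trigger a full rebuild.
cache.get("/story/42", user="alice")
cache.get("/story/42", user="alice")
print(anonymous_renders, cache.render_count)
```

A real cache also needs expiry and invalidation when content changes, which this sketch leaves out.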

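| Day | Date | Visits | Page Views | Hits | Bandwidth |
|-----|-------------|---------|------------|------------|-----------|
| Sun | 09 Mar 2008 | 98,984 | 505,852 | 5,978,013 | 80.99 GB |
| Mon | 10 Mar 2008 | 111,201 | 985,229 | 10,215,878 | 127.62 GB |
| Tue | 11 Mar 2008 | 58,146 | 818,198 | 7,442,787 | 86.90 GB |
| Wed | 12 Mar 2008 | 55,531 | 807,695 | 7,099,067 | 79.75 GB |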
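As a quick check of the "double the normal visits" figure, this sketch compares the Digg Monday against the two ordinary Mondays listed earlier. Using the mean of those two Mondays as the baseline is our assumption, and the variable names are illustrative.

```python
# Ordinary Mondays from the normal traffic table.
normal_mondays = [
    {"visits": 53_636, "page_views": 879_777},   # Mon 25 Feb 2008
    {"visits": 49_683, "page_views": 865_697},   # Mon 03 Mar 2008
]

# The Monday after the Digg front page.
digg_monday = {"visits": 111_201, "page_views": 985_229}  # Mon 10 Mar 2008


def mean(values):
    values = list(values)
    return sum(values) / len(values)


# Assumption: the average of the two ordinary Mondays is a fair baseline.
baseline_visits = mean(day["visits"] for day in normal_mondays)
baseline_page_views = mean(day["page_views"] for day in normal_mondays)

visit_ratio = digg_monday["visits"] / baseline_visits
page_view_ratio = digg_monday["page_views"] / baseline_page_views

print(f"visits:     {visit_ratio:.2f}x a normal Monday")
print(f"page views: {page_view_ratio:.2f}x a normal Monday")
```

Visits come out at a little over twice the baseline, while page views rise by a much smaller fraction, which suggests that Digg visitors viewed far fewer pages each than the regular logged-in audience.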

Server Configuration

The site is on a single server, with the entire LAMP stack running on it. It has dual quad-core Xeons (8 cores total) and 8GB of memory, running 64-bit Linux (Ubuntu Server).

There is no reverse proxy.

There is no InnoDB.

There are no multiple boxes.

The video content from the site is served from another box, but all the images are still on the same box.

We use memcache without the database caching.

We use a few custom performance patches: an alias whitelist, and writing the last access time only once every 5 minutes instead of on every page view.
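The idea behind the last access time patch can be sketched as follows. This is not the actual patch, which is not shown here. It is a minimal illustration in Python, with a dictionary standing in for the database column, and `AccessTimeRecorder` and its methods are hypothetical names.

```python
import time
from typing import Callable, Dict

ACCESS_WRITE_INTERVAL = 5 * 60  # seconds: the 5-minute interval described above


class AccessTimeRecorder:
    """Records a user's last access time at most once per interval."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self._clock = clock
        self._last_written: Dict[int, float] = {}  # stand-in for the database
        self.writes = 0                            # number of simulated writes

    def touch(self, uid: int) -> bool:
        """Call on every page view; returns True only when a write happened."""
        now = self._clock()
        last = self._last_written.get(uid)
        if last is not None and now - last < ACCESS_WRITE_INTERVAL:
            return False  # written recently enough, skip the write
        self._last_written[uid] = now
        self.writes += 1
        return True


# Simulate one user viewing pages at these offsets (in seconds).
fake_now = [0.0]
recorder = AccessTimeRecorder(clock=lambda: fake_now[0])
results = []
for t in (0, 10, 299, 300, 450, 600):
    fake_now[0] = float(t)
    results.append(recorder.touch(uid=1))

print(results, recorder.writes)
```

Six page views result in three writes here. For a busy logged-in user the saving is much larger, at the cost of the stored access time being up to 5 minutes stale.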

More importantly, the site has only 46 modules, as opposed to the 80-120 we find on sites these days.

Server Resource Utilization

During the Digg spike, it was interesting to watch resource utilization. Here are some graphs to illustrate.

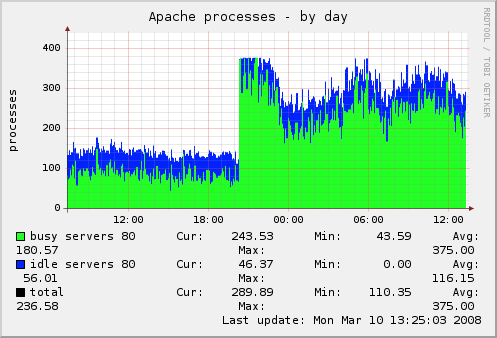

This is the number of Apache processes. You can see how it briefly jumped up to reach the MaxClients limit of 375. The limit is a safety mechanism to avoid swapping, which can kill the server, at the expense of queuing some users.
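A common rule of thumb for choosing such a ceiling is to divide the memory left over for Apache by the size of a typical Apache process. The sketch below shows that arithmetic only. The reserved memory and per-process size are made-up inputs for illustration, not measurements from this site, so the result is not expected to match the 375 used here.

```python
def max_clients(total_ram_mb: int, reserved_mb: int, apache_process_mb: float) -> int:
    """Largest number of Apache processes that fit in RAM without swapping.

    total_ram_mb       physical memory in the box
    reserved_mb        memory set aside for MySQL, memcache, the OS, etc.
    apache_process_mb  resident size of a typical Apache process
    """
    if apache_process_mb <= 0:
        raise ValueError("apache_process_mb must be positive")
    available_mb = total_ram_mb - reserved_mb
    if available_mb <= 0:
        return 0
    return int(available_mb // apache_process_mb)


# 8GB box as in the article; the other two numbers are hypothetical.
limit = max_clients(total_ram_mb=8192, reserved_mb=2048, apache_process_mb=20)
print(limit)
```

In practice the per-process size should be measured on the running server, since it depends heavily on which PHP extensions and modules are loaded.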

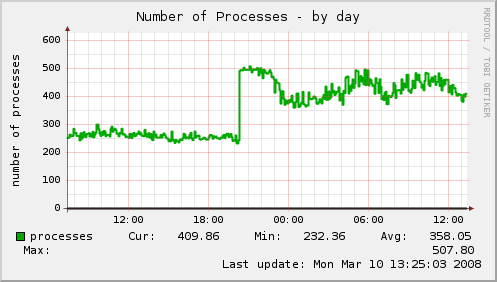

This is the total number of processes on the server, mainly Apache processes.

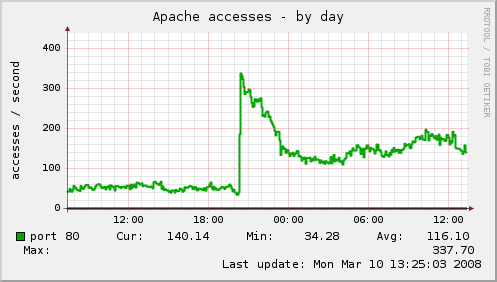

This is the number of accesses (i.e. hits, not pages!) per second that Apache is serving. It is around 340 per second, as opposed to the normal 150 per second.

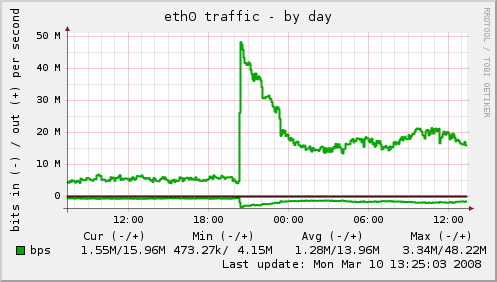

This is the number of bits going in and out of the Internet-facing Ethernet port (eth0).

Update April 10, 2008:

It's official: the site broke the 1,000,000 page view per day mark without being on Digg.

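| Date | Visits | Page Views | Hits | Bandwidth |
|-------------|--------|------------|-----------|-----------|
| 07 Apr 2008 | 59,294 | 960,453 | 8,240,531 | 95.76 GB |
| 08 Apr 2008 | 58,803 | 1,007,068 | 8,384,384 | 104.53 GB |
| 09 Apr 2008 | 60,346 | 987,919 | 7,856,087 | 95.30 GB |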

Update July, 2010:

After almost 2.5 years, we at 2bits.com have dealt with even larger sites.

Please check our presentation on a site that does 2.8 million page views per day, 70 million per month, one server!

That site broke the record later, and did 3.4 million page views in one day, and 92 million in one month.

Comments

Visitor (not verified)

CPU/memory/etc.?

Wed, 2008/06/25 - 13:59

I'm curious what the CPU, memory, etc. looked like during these peaks. Any chance of seeing those graphs added?

thanx!

Khalid

Not much to say ...

Wed, 2008/06/25 - 15:13

That site regularly breaks the 1,000,000 page views per day barrier now, a couple of times per week. The highest so far is 1.091 million page views (7.97 million hits).

However, the CPU and memory graphs do not show anything out of the ordinary, other than the usual daily fluctuation of peak hours. CPU has a maximum of 191% usr + 129% sys, and an average of 83% usr + 17% sys. This is out of 800% because Munin sees this as an 8-core system. Memory usage is 2.29GB on average and 4.09GB at maximum (out of 8GB total RAM in the box).

The only time we see noticeable spikes is when the site is on Digg's front page. Again, this is all anonymous traffic, so memcache really helps. We see the spike in number of Apache accesses, and number of Apache processes (up to the MaxClients limit), and of course memory to go with them. But not much else.

Heavy tuning has been done on that site, but the main items are a patch for URL alias whitelisting, using tracker2, and most importantly, memcache with several bins.

Hope this helps.


Visitor (not verified)

bravo

Wed, 2008/10/01 - 01:06

thanks for posting and sharing this excellent information!

Visitor (not verified)

How do you get these

Tue, 2008/12/02 - 13:48

How do you get these stats:

Day ---Date---- Visits Page Views ---Hits-- Bandwidth

Mon 25 Feb 2008 53,636 879,777 7,636,793 90.69 GB

Tue 26 Feb 2008 53,636 876,492 7,446,068 85.82 GB

Wed 27 Feb 2008 52,552 818,191 7,034,696 80.03 GB

I'd like to get simple text stats like that, but all I know how to do is use webalizer, awstat and the like..

Khalid

Awstats

Tue, 2008/12/02 - 13:58

This is from Awstats.

Visitor (not verified)

Google Analytics

Mon, 2009/01/26 - 13:26

Can Google Analytics provide such information?

Day ---Date---- Visits Page Views ---Hits-- Bandwidth

Mon 25 Feb 2008 53,636 879,777 7,636,793 90.69 GB

Tue 26 Feb 2008 53,636 876,492 7,446,068 85.82 GB

Wed 27 Feb 2008 52,552 818,191 7,034,696 80.03 GB

...

Sat 01 Mar 2008 29,999 324,972 2,781,091 33.05 GB

Sun 02 Mar 2008 33,709 431,533 3,518,855 42.37 GB

...

Mon 03 Mar 2008 49,683 865,697 7,429,576 85.27 GB

Tue 04 Mar 2008 50,981 831,947 6,990,756 80.63 GB

Wed 05 Mar 2008 51,907 831,332 7,162,797 81.47 GB

Khalid

No

Mon, 2009/01/26 - 14:12

No. Use Awstats for that kind of statistics.

Visitor (not verified)

Wonderful information

Thu, 2009/08/06 - 10:07

I was wondering how much load my server, with the same specs as yours, can handle.

You provided invaluable information for me, thanks

Visitor (not verified)

Site url?

Sat, 2009/09/19 - 11:06

First of all thanks for the great article!

I'm wondering if it is possible to post a complete list of modules used and a site url?

Visitor (not verified)

can u give me the URL of the

Wed, 2009/11/04 - 17:02

can u give me the URL of the website that is discussed in this article,

I just want to see what kind of website it is and hopefully, I can learn from it.

thx
