One of the suboptimal techniques that developers often use is a query that retrieves the entire content of a table, without any conditions or filters.

For example:

    SELECT * FROM table_name ORDER BY column_name;

This is acceptable if the table does not have too many rows and the function that runs the query is called only once per page view.

However, things start to get out of control when developers do not take into account the frequency of these calls.

Here is an example to illustrate the problem:

A client had a high load average (around 5 or 6) on their server, which had around 400 logged-in users at peak hours. The server was fragile: any small disturbance, such as a traffic influx or a misbehaving crawler, pushed the load above 12.

This was due to using an older version of the Keyword Links module.

In this old version, the following hook replaced certain keywords whenever a node was displayed:

    function keyword_link_nodeapi(&$node, $op, $teaser, $page) {
      if ($op == 'view' && ...
        $node->content['body']['#value'] = keyword_link_replace(...);
      }
    }

And this hook ran the same keyword replacement for each comment as well:

    function keyword_link_comment(&$a1, $op) {
      if ($op == 'view') {
        $a1->comment = keyword_link_replace($a1->comment);
      }
    }

The function that replaced the content with keywords was as follows:

    function keyword_link_replace($content) {
      $result = db_query("SELECT * FROM {keyword_link} ORDER BY wid ASC");
      while ($keyword = db_fetch_object($result)) {
        ...
        $content = preg_replace($regex, $url, $content, $limit);
      }
      return $content;
    }

This function executes the query on every call, then iterates through the whole result set, replacing words.

Now, let us see how many rows there are in the table.

    mysql> SELECT COUNT(*) FROM keyword_link;
    +----------+
    | count(*) |
    +----------+
    |     2897 |
    +----------+
    1 row in set (0.00 sec)

Wow! That is a relatively large number.

And Eureka! That is it! The query was re-executed every time the replace function was called!

This means that for a listing of 50 nodes, there would be 50 queries!

And even worse, for a node with tens or hundreds of comments, there would be tens or hundreds of queries as well!
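To make the multiplication concrete, here is a small sketch in plain PHP that runs without Drupal. The function `fake_keyword_query()` and the two counters are hypothetical stand-ins for `db_query()` and the real table. Only the row count (2,897) and the call count (51, for one node plus 50 comments on a page) come from the figures in this article.

```php
<?php
// Hypothetical stand-in for db_query(): builds 2,897 fake keyword rows
// and counts how often it is called.
function fake_keyword_query() {
  $GLOBALS['query_count']++;
  $rows = array();
  for ($wid = 1; $wid <= 2897; $wid++) {
    $rows[] = array('wid' => $wid, 'keyword' => 'keyword' . $wid);
  }
  return $rows;
}

// Mimics the shape of the old keyword_link_replace(): one query per call,
// and one loop iteration per row (the real module ran a regex there).
function naive_replace($content) {
  foreach (fake_keyword_query() as $row) {
    $GLOBALS['rows_processed']++;
  }
  return $content;
}

$GLOBALS['query_count'] = 0;
$GLOBALS['rows_processed'] = 0;

// One node body plus 50 comments on the same page.
for ($i = 0; $i < 51; $i++) {
  naive_replace('some text');
}

echo $GLOBALS['query_count'], ' queries, ',
  $GLOBALS['rows_processed'], " rows processed\n";
```

Under these assumptions, a single page view issues 51 identical queries and walks 147,747 rows, which is in the same range as the `preg_replace()` call count in the profile further down.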

Solution

The solution here was to upgrade to the latest release of the module, which has eliminated the replacement of keywords for comments.

But a better solution, which preserves the functionality for comments, combines two parts:

Use memcache as the cache layer

By using memcache, we avoid going to the database for any caching. It is always a good idea in general to have that, except for simple or low traffic sites.
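As a rough illustration, the fragment below shows what routing Drupal 6 caching through memcache can look like in `settings.php`. It assumes the contributed Memcache module is installed under `sites/all/modules/memcache` and that a memcached daemon listens on `localhost:11211`. The path, host, and port are assumptions, not details taken from the client's site, so check the module's own README for your version.

```php
<?php
// settings.php fragment (sketch). The module path, host, and port below
// are assumptions; adjust them to your installation.
$conf['cache_inc'] = './sites/all/modules/memcache/memcache.inc';
$conf['memcache_servers'] = array('localhost:11211' => 'default');
$conf['memcache_bins'] = array('cache' => 'default');
```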

However, on its own, this is not enough.

Static caching for cache_get() result

By statically caching the results of the query, or of the cache_get(), those operations are executed once per page view, and not 51 times for a node displaying comments (once for the node body plus once for each of the 50 comments on the page). This is feasible if the dataset is not too large. For example, for this site, the size was around 1.3 MB for the three fields that are used from that table, which fits in memory without issues for each PHP process.

This is the outline for the code:

    function keyword_link_replace($content) {
      // Kept for the rest of the page request, so the lookup happens once.
      static $static_data;

      if (!isset($static_data)) {
        if (($cache = cache_get('keyword_link_data')) && !empty($cache->data)) {
          // Cache hit: reuse the stored keyword list.
          $static_data = $cache->data;
        }
        else {
          // Cache miss: query the table once and store the rows.
          $static_data = array();
          $result = db_query("SELECT * FROM {keyword_link} ORDER BY wid ASC");
          while ($keyword = db_fetch_object($result)) {
            $static_data[] = $keyword;
          }
          cache_set('keyword_link_data', $static_data, 'cache');
        }
      }

      // replace_keyword() is this outline's helper; it is assumed to take
      // $content by reference and apply one keyword's replacement.
      foreach ($static_data as $keyword) {
        replace_keyword($keyword, $content);
      }

      return $content;
    }
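The effect of the static variable in the outline above can be checked in isolation with a sketch that runs without Drupal. Here `load_keywords()` is a hypothetical loader standing in for the `cache_get()` and `db_query()` branch, and the single keyword, its URL, and the regular expression are invented for illustration. They are not the module's actual data or pattern.

```php
<?php
// Hypothetical loader standing in for cache_get()/db_query().
// It counts how many times the expensive lookup actually runs.
function load_keywords() {
  $GLOBALS['load_count']++;
  return array('drupal' => 'http://drupal.org/');
}

// Static caching: the loader runs on the first call only; later calls
// in the same PHP request reuse the in-memory copy.
function cached_replace($content) {
  static $keywords;
  if (!isset($keywords)) {
    $keywords = load_keywords();
  }
  foreach ($keywords as $word => $url) {
    // Illustrative pattern: whole word, case-insensitive, first match only.
    $regex = '/\b' . preg_quote($word, '/') . '\b/i';
    $link = '<a href="' . $url . '">$0</a>';
    $content = preg_replace($regex, $link, $content, 1);
  }
  return $content;
}

$GLOBALS['load_count'] = 0;
$output = '';

// One node body plus 50 comments on the same page.
for ($i = 0; $i < 51; $i++) {
  $output = cached_replace('I use Drupal daily.');
}

echo $GLOBALS['load_count'], "\n";
echo $output, "\n";
```

The loader runs once for all 51 calls, while every call still gets its keywords linked. The trade-off is that each PHP process holds its own copy of the data for the duration of the request, which is why the size of the dataset matters.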

You can download the full Drupal 6.x version with the fixes from here.

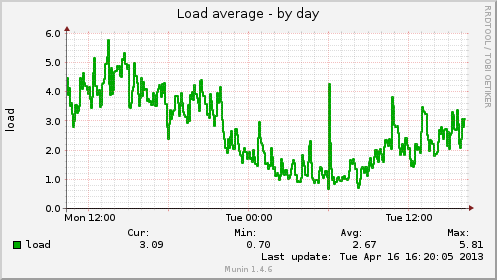

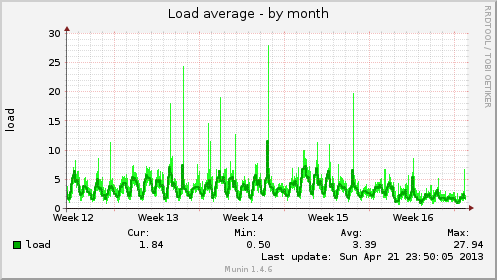

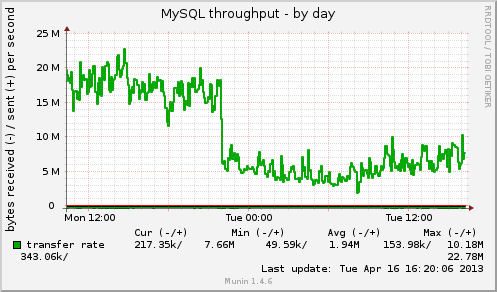

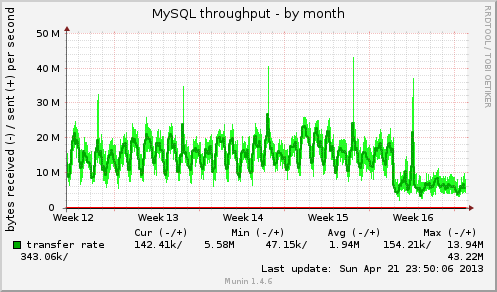

What a difference a query makes

The change was made at 22:00 in the daily graphs, and 6 days before the monthly graph was taken. The load average is visibly lower: it ranges between 1.8 and 2.4 for most of the day, with occasional spikes above 3. This is far better than the load of 5 or 6 before the fix. The amount of data retrieved from MySQL is also halved.

As you will notice, the number of SQL queries did not change. This is probably the effect of MySQL's query cache: since the queries were identical within the same page, MySQL served the result from the query cache and did not have to re-execute the query tens or hundreds of times per page. Even though the query cache saved us from re-executing the query, there is still overhead in getting that data from MySQL's cache to the application, and that consumed CPU cycles.

Faster Node displays

And because we are processing less data and doing fewer regular expression replacements, display has improved for nodes that have lots of comments. Before the fix, for a node that had hundreds of comments, with 50 comments shown per page, the total page load time was 8,068 milliseconds.

The breakdown was as follows:

keyword_link_replace() 51 calls totalling 2,429 ms

preg_replace() 147,992 calls totalling 1,087 ms

mysql_fetch_object() 150,455 calls totalling 537 ms

db_fetch_object() 150,455 calls totalling 415 ms

mysql_query() 1,479 calls totalling 393 ms

unserialize() 149,656 calls totalling 339 ms

That is a total of 5,254 milliseconds spent processing keywords in comments only.

After eliminating the calls to hook_comment() in the module, the total load time for the node was 3,122 milliseconds.

Conclusion

So, always look at the size of your dataset, as well as the frequency of resource intensive or slow operations. They could be bottlenecks for your application.

Comments

Eric Mulder (not verified)

Use querycache and memcache

Thu, 2013/04/25 - 02:13

Great article! If you are able to change the code which is causing the high load on MySQL this is absolutely the way to go.

If you are not so lucky and experience high loads on your database server, you should take a look at your database configuration. Options like the table_cache and query_cache will really help the performance of queries that are called often. In addition to that, you can use memcache, which acts as a data storage cache between Drupal and MySQL. Since queries to the server's RAM are less CPU intensive than hard disk operations, you will soon see a decrease in your server load. Clearly, your server will require more RAM.

Adding extra caching is not always the best solution. Don't use it as a bandage to fix your performance issues. As this article describes, look at 'your' code where possible and perform massive surgery.